For the last two decades, search meant one thing: Google rankings.

Fast forward to today, and that assumption is quietly breaking.

ChatGPT, Perplexity, Gemini, and Claude now handle billions of searches every month. Most users still use Google, but they are no longer loyal to a single interface. Discovery has become fragmented across traditional search engines, AI assistants, AI Overviews, and conversational tools that answer questions directly.

What’s changed isn’t search itself, but where it now happens.

And it creates a problem most brands are completely unprepared for. They have no idea how they appear inside AI-generated answers, whether they are cited at all, or why competitors keep showing up where they do not.

Welcome to the AI visibility gap.

Table of Contents

- The Parallel Search Reality Nobody Is Measuring

- 3 Core Problems Brands Are Facing

- Why This Is an Evolution, Not a Disruption

- From Rankings to Selection Rates

- Enter the AI Visibility Toolkit by Semrush

- Why Foundational SEO Still Matters More Than Ever

- The AI Visibility Score Concept

- Why Timing Matters More Than Most People Realize

- Practical Use Cases Teams Are Already Solving

- The Bigger Picture

- Ready to See Where Your Brand Actually Shows Up?

The Parallel Search Reality Nobody Is Measuring

Here is the uncomfortable truth: Roughly 95 percent of ChatGPT users still use Google. They did not abandon traditional search. What changed is how discovery actually happens. Users are no longer loyal to a single interface. They move fluidly between tools, stacking them together as part of the same research flow.

A typical buyer today does not follow a clean funnel. Instead, their journey often looks something like this:

- Google a product category to get initial options

- Ask ChatGPT for comparisons, pros and cons, and recommendations

- Use Perplexity to validate sources or check credibility

- Scan Gemini summaries directly inside Google results

This behavior is already normal, especially for software, services, and high-consideration purchases.

Each step subtly shapes brand perception. Some steps result in clicks. Others never send traffic at all. But every step influences which brands feel trustworthy and which ones are ignored entirely.

The problem is that most analytics stacks still report a comforting illusion. Rankings, impressions, clicks. Metrics that worked well when discovery happened in one place.

None of those tell you whether ChatGPT is recommending your competitor instead, or whether your brand is absent from the AI answers shaping the decision before a search result is ever clicked.

3 Core Problems Brands Are Facing

1. The AI Visibility Gap Is Real and Growing

Most brands today have a rough sense of how they perform on Google. They track impressions, clicks, and rankings. They know which pages drive traffic.

The moment a user switches to ChatGPT, Perplexity, Gemini, or Claude, that visibility collapses into a black box.

You do not know:

- Whether your brand is being cited at all

- Which competitors are being recommended instead

- What context your brand appears in, if it appears at all

- Whether AI answers position you as a leader, an alternative, or an afterthought

This is not a hypothetical problem.

Users increasingly ask AI platforms for product comparisons, vendor recommendations, tool shortlists, and buying advice. These systems surface a small set of “trusted” sources. If you are not part of that set, you do not exist in that decision moment.

That is the real risk: being skipped entirely.

What makes this gap dangerous is that most teams do not even realize it exists. They see stable Google traffic and assume visibility is intact, while AI platforms quietly train users to trust different brands.

By the time referral traffic starts showing up in analytics, competitors have already established themselves as default answers.

2. Traditional SEO Breaks Down in Multi-Turn Conversations

Classic SEO was built for single queries.

A user searches, scans ten blue links, clicks one, and leaves.

Conversational AI does not behave that way.

A single session might include:

- An initial exploratory question

- A follow-up comparison

- A narrowing of options

- A trust check

- A final recommendation request

Each turn builds on the previous one. Context compounds. Intent sharpens.

Most content that ranks well on Google is not structured for this reality. It answers one question well, but fails to support the conversation that follows.

That is why brands often see this pattern:

- Their content ranks page one

- AI platforms reference competitors instead

- Follow-up questions never surface their pages

This is not because AI is ignoring SEO. It is because the content is not designed to survive extended dialogue.

Multi-turn optimization requires:

- Clear entity positioning

- Explicit comparisons

- Structured explanations that anticipate follow-up questions

- Content depth that AI systems can confidently build upon

Without adapting to this format, brands remain visible only at the surface level, while AI conversations move on without them.

3. Attribution Blindness Makes Optimization Impossible

Even brands that suspect they have an AI visibility problem cannot prove it.

Existing analytics tools were not built to answer questions like:

- How often is our brand cited by ChatGPT?

- Which competitors dominate AI answers for our core topics?

- Are we gaining or losing presence over time?

- What sentiment surrounds our mentions?

- Which AI platforms drive referral traffic, if any?

As a result, teams rely on anecdotes, screenshots, and manual testing.

Someone asks ChatGPT a question, notices a competitor mentioned, and raises an alarm. Another person gets a different answer and dismisses it.

There is no baseline. No trend data. No competitive benchmark.

This creates a dangerous situation where decisions are made based on fear, hype, or isolated observations rather than evidence.

Without attribution, there is no optimization loop.

You cannot improve what you cannot measure. And right now, most brands are flying blind across the fastest-growing discovery surfaces on the internet.

Why This Is an Evolution, Not a Disruption

Despite the panic cycles on LinkedIn, AI search does not invalidate everything we know about SEO. That framing is lazy and mostly wrong.

The fundamentals still apply. What has changed is where and how those fundamentals get interpreted.

At a base level, AI systems still lean on familiar signals:

- Authority: brands and pages with real backlinks, mentions, and historical credibility are far more likely to be surfaced

- Relevance: content still needs to clearly answer the question being asked, not vaguely gesture at it

- Entity clarity: AI systems need to understand who you are, what you do, and how you relate to a topic

None of that disappeared.

What changed is the consumption layer. Instead of those signals being used to rank ten blue links, they are now used to select a small number of sources that an AI system is willing to trust and synthesize into an answer.

That distinction matters.

Search engines were built to rank options. AI systems are built to reduce options. They compress the web into a handful of recommendations and explanations, often without showing users where the information came from unless asked.

So the goal is no longer to “beat” nine other results. The goal is to be included at all.

From Rankings to Selection Rates

Traditional SEO was optimized around a familiar scoreboard. Positions one through ten. Incremental gains. Ranking reports that showed movement week over week.

AI search changes the rules of that scoreboard.

There is no page one. There is no page two. There is a selection set, and then there is everything else.

If your brand does not make that set, it does not exist in that conversation, regardless of how well you rank on Google for the same topic.

This is why different signals suddenly matter more:

- Citation frequency: how often your brand or content is referenced across AI-generated answers

- Context quality: whether your brand appears as a primary recommendation, a secondary mention, or a throwaway reference

- Sentiment and framing: how AI systems describe your brand when they include it

These are not abstract ideas. They directly influence which brands users trust when AI systems summarize the market for them.

It is also why traditional SEO tools start to feel insufficient. They were never designed to answer questions like:

- How often does ChatGPT surface my brand for a specific topic?

- Which competitors consistently appear in AI answers while I do not?

- What follow-up questions remove my brand from consideration?

- How does my brand’s positioning differ across ChatGPT, Perplexity, and Gemini?

Without visibility into those answers, optimization stalls. You can keep improving rankings and still lose mindshare where decisions are increasingly shaped.

That’s the real shift. SEO fundamentals still matter, but the leverage has moved from ranking higher to being chosen at all.
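To make the idea of a selection rate concrete, here is a minimal sketch. It assumes you have already collected a sample of AI-generated answers for one topic, however you gather them. The function name `selection_rates`, the brand names, and the sample answers are all hypothetical, and the simple substring match is an assumption made for illustration, not how any particular tool detects mentions.

```python
from collections import Counter


def selection_rates(answers, brands):
    """Return, per brand, the fraction of sampled answers that mention it.

    Matching is a case-insensitive substring check, which is a deliberate
    simplification for this sketch.
    """
    counts = Counter()
    for text in answers:
        lowered = text.lower()
        for brand in brands:
            if brand.lower() in lowered:
                counts[brand] += 1
    total = len(answers)
    return {b: (counts[b] / total if total else 0.0) for b in brands}


# Hypothetical sample of AI answers for a single topic.
sample_answers = [
    "For small teams, Acme CRM and Bolt CRM are the usual picks.",
    "Bolt CRM is a strong choice if you need automation.",
    "Consider Bolt CRM; Acme CRM is a cheaper alternative.",
    "Most reviewers recommend Bolt CRM.",
]

rates = selection_rates(sample_answers, ["Acme CRM", "Bolt CRM", "Cobalt CRM"])
for brand, rate in rates.items():
    print(f"{brand}: selected in {rate:.0%} of sampled answers")
```

A brand with a rate of zero is simply outside the selection set for that topic, whatever its Google ranking looks like.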

Enter the AI Visibility Toolkit by Semrush

This is where Semrush’s AI Visibility Toolkit comes in.

Instead of trying to bolt AI metrics onto traditional rank tracking, the AI Visibility Toolkit is built specifically for this new discovery layer. It assumes that AI platforms are not just another SERP feature, but an entirely different way users encounter brands. That distinction matters, because optimization only works when measurement reflects reality.

Rather than forcing old SEO frameworks onto AI, the toolkit starts with a simpler question: How do AI systems actually surface brands today?

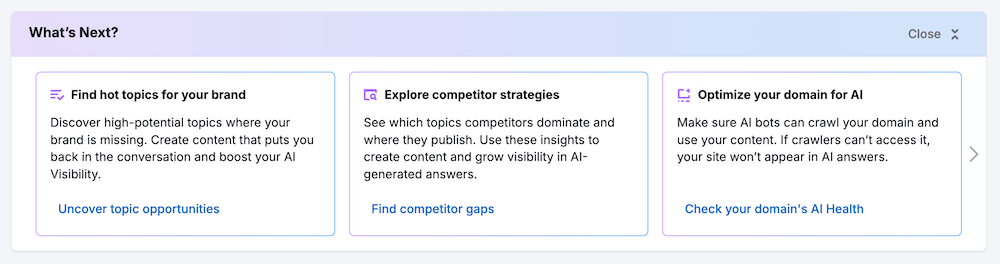

How Semrush’s AI Visibility Toolkit Solves the Core AI Discovery Problem

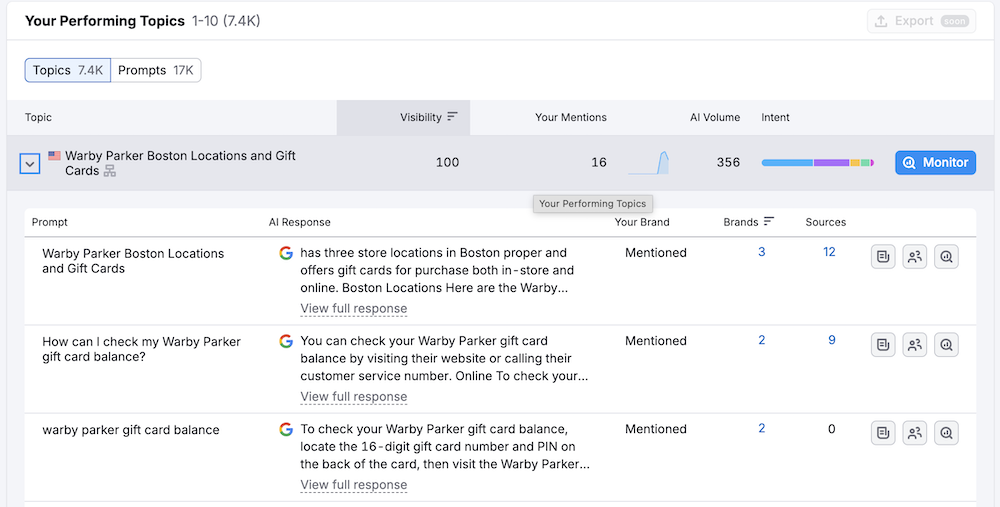

1. Measure Where You Actually Appear

Most teams assume they know where they show up online. In reality, they only know where they rank on Google.

The AI Visibility Toolkit closes that gap by tracking how your brand appears across the platforms that increasingly shape discovery:

- ChatGPT

- Perplexity

- Gemini

- Google AI-driven surfaces

Instead of isolated screenshots or manual prompts, you get a consistent view of brand mentions, citation frequency, and sentiment across these systems.

For the first time, you can see whether AI platforms recognize your brand at all, how often they reference it, and in what context. That alone changes the conversation from speculation to evidence.

2. Understand Multi-Turn Visibility Gaps

Ranking for a keyword does not guarantee visibility inside an AI conversation.

The toolkit makes this painfully clear by showing which questions your brand appears for and, just as importantly, where it disappears while competitors remain present.

This matters because AI interactions rarely stop at a single prompt. Users ask follow-up questions. They refine intent. They compare options.

By mapping visibility across related questions, you can identify:

- Topics where your brand enters the conversation early but drops out later

- Competitors that consistently survive deeper follow-ups

- Gaps where content exists but lacks the depth or clarity AI systems rely on

That insight makes it possible to restructure content around conversational depth and continuity, not just keyword matching. It shifts optimization from chasing rankings to supporting real decision paths.
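The "enters early, drops out later" pattern is easy to reason about once a conversation is reduced to the set of brands mentioned at each turn. The sketch below assumes you have that data in hand. The function `first_dropout_turn`, the brand names, and the example conversation are hypothetical and are not drawn from the toolkit itself.

```python
def first_dropout_turn(turn_mentions, brand):
    """Return the 1-based turn where `brand` first disappears after appearing.

    `turn_mentions` is a list of sets, one per conversation turn, holding the
    brands mentioned in that turn's answer. Returns None if the brand never
    appears or never drops out.
    """
    seen = False
    for turn_number, mentioned in enumerate(turn_mentions, start=1):
        if brand in mentioned:
            seen = True
        elif seen:
            return turn_number
    return None


# Hypothetical five-turn session: explore, compare, narrow, trust check, recommend.
conversation = [
    {"Acme CRM", "Bolt CRM"},
    {"Acme CRM", "Bolt CRM"},
    {"Bolt CRM"},
    {"Bolt CRM"},
    {"Bolt CRM"},
]

for brand in ("Acme CRM", "Bolt CRM"):
    turn = first_dropout_turn(conversation, brand)
    status = f"drops out at turn {turn}" if turn else "survives every turn"
    print(f"{brand}: {status}")
```

Running this across many sampled conversations would show which follow-up stage most often removes a brand, which is where content depth is worth checking first.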

3. Replace Guesswork With Attribution Signals

Right now, most teams rely on intuition when it comes to AI visibility. Someone notices a competitor mentioned in ChatGPT. Another person gets a different answer and shrugs it off.

The AI Visibility Toolkit replaces that noise with actual signals.

Instead of vague trend watching, you get data around:

- Citation frequency over time

- Competitive presence across AI platforms

- Topic associations that AI systems consistently link to your brand

- Areas where competitors are gaining ground before traffic shifts become visible

This creates something most teams do not have today: a feedback loop.

You can measure. You can adjust. You can see whether changes improve visibility instead of hoping they do.

For the first time, AI visibility stops being a blind spot and becomes something you can actively manage.
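As a rough illustration of what a feedback loop needs, the sketch below compares citation rates between two measurement periods. It assumes each period is summarized as a pair of counts per brand (answers citing the brand, answers sampled). The function `citation_trend`, the brand names, and the numbers are all hypothetical.

```python
def citation_trend(previous, current):
    """Return the change in citation rate per brand between two periods.

    Each argument maps brand -> (cited_answers, sampled_answers).
    The result is expressed in percentage points.
    """
    def rate(counts):
        cited, sampled = counts
        return cited / sampled if sampled else 0.0

    trend = {}
    for brand in current:
        before = rate(previous.get(brand, (0, 0)))
        after = rate(current[brand])
        trend[brand] = round((after - before) * 100, 2)
    return trend


# Hypothetical counts from two monthly samples of the same prompt set.
last_month = {"Acme CRM": (12, 100), "Bolt CRM": (40, 100)}
this_month = {"Acme CRM": (18, 100), "Bolt CRM": (35, 100)}

for brand, delta in citation_trend(last_month, this_month).items():
    print(f"{brand}: {delta:+.2f} percentage points")
```

Keeping the prompt set fixed between periods matters here. Otherwise a change in the rate may reflect a change in the questions rather than a change in visibility.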

Why Foundational SEO Still Matters More Than Ever

As AI platforms become part of everyday discovery, it is tempting to assume they operate on an entirely new set of rules. In practice, they still depend heavily on the same signals that have shaped search visibility for years.

When AI systems decide which brands to reference, cite, or summarize, they look for evidence that a source is credible and worth trusting. That evidence comes from familiar places: backlinks, brand mentions, topical depth, technical quality, and clear signals about what a site is actually about.

This is why two areas of Semrush’s existing SEO tooling tend to have an outsized impact.

- Organic Research helps identify which competitors and content formats repeatedly show up in AI-generated answers. This goes beyond ranking analysis and starts revealing which sources AI systems lean on as reference material.

- Keyword Magic Tool surfaces question-based and long-tail queries that resemble how people actually interact with AI tools. These prompts reflect curiosity, comparison, and evaluation, not just transactional intent.

Strong fundamentals still do the quiet work in the background:

- Solid technical SEO ensures content can be accessed and interpreted without friction

- Clear entity signals help AI systems understand who you are and where you fit within a topic

- Broad, coherent topical coverage increases the chance that your content remains relevant as conversations evolve

AI visibility builds on this groundwork. Brands that already demonstrate clarity and depth are far more likely to be cited consistently, especially as AI interactions move beyond simple, one-off questions.

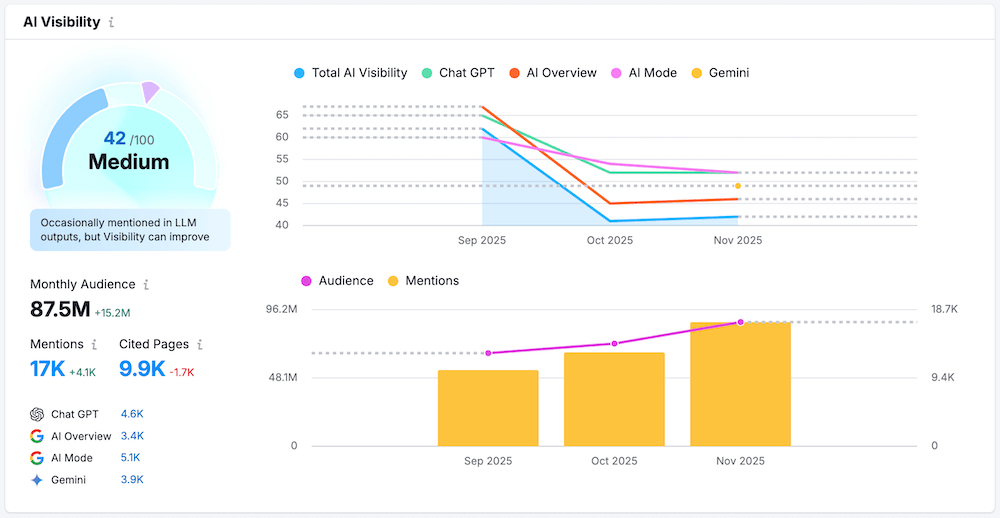

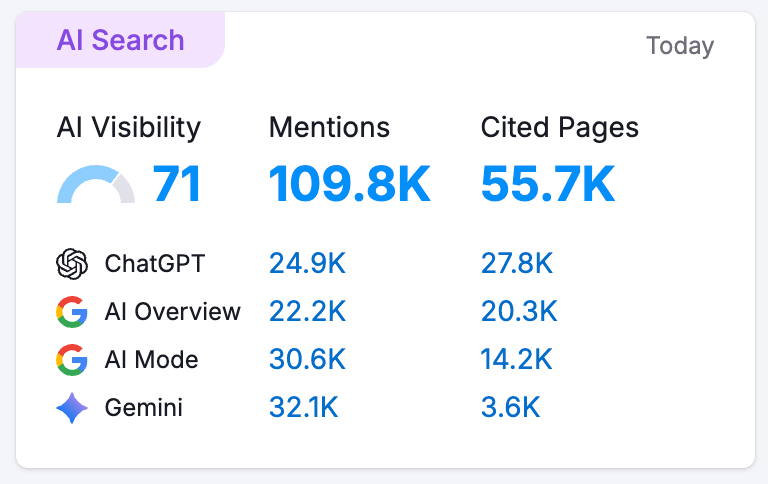

The AI Visibility Score Concept

One of the biggest obstacles teams face right now is fragmentation.

Visibility is measured in pieces. Rankings live in one dashboard. AI Overviews are checked manually. Chatbot mentions are discovered through ad hoc testing. None of this reflects how people actually research today.

A more useful approach is to step back and look for a single signal that captures overall presence across platforms.

That’s where the idea of an AI Visibility Score comes in.

Rather than focusing on individual rankings or isolated mentions, the score represents how often a brand appears across the discovery surfaces that now matter:

- Traditional search results

- Google AI Overviews

- ChatGPT

- Perplexity

- Gemini

This shifts the question from “Where do we rank?” to “How visible are we when people research this topic across tools?”

That distinction matters because users do not experience search in silos. They move between platforms fluidly, often without caring which system delivered the answer. What sticks is whether a brand shows up repeatedly and in a credible context.

An AI Visibility Score reflects that reality more accurately than any single ranking report. It provides a way to understand share of presence across the modern discovery landscape, not just performance inside one interface.
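One way to picture such a score is as a weighted average of presence rates across surfaces. The sketch below is an illustrative assumption only and is not Semrush's formula. The function `visibility_score`, the surface keys, the equal default weights, and the sample rates are all hypothetical.

```python
def visibility_score(presence, weights=None):
    """Combine per-surface presence rates (0.0 to 1.0) into a 0-100 score.

    `presence` maps surface name -> share of sampled queries where the brand
    appeared. `weights` optionally maps surface name -> relative importance;
    surfaces are weighted equally when it is omitted.
    """
    if weights is None:
        weights = {surface: 1.0 for surface in presence}
    total_weight = sum(weights[s] for s in presence)
    if total_weight == 0:
        return 0.0
    weighted = sum(presence[s] * weights[s] for s in presence)
    return 100 * weighted / total_weight


# Hypothetical presence rates for one brand on one topic.
presence = {
    "traditional_search": 0.8,
    "ai_overviews": 0.4,
    "chatgpt": 0.2,
    "perplexity": 0.3,
    "gemini": 0.3,
}

score = visibility_score(presence)
print(f"Visibility score: {score:.1f} out of 100")
```

The weights are where judgment enters. A team whose buyers lean heavily on one assistant could weight that surface more, at the cost of making scores harder to compare across teams.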

Why Timing Matters More Than Most People Realize

AI systems do not treat every source equally from day one. They build confidence gradually, based on repeated exposure to the same brands and domains across similar questions.

When a brand starts getting cited early for a topic, that association tends to stick. Each additional citation reinforces the model’s confidence that the brand belongs in that context. Over time, this creates a compounding effect where the same sources are surfaced again and again.

This is why timing matters more than most teams expect.

Practitioners increasingly refer to a 72-hour citation window to describe how quickly early signals begin shaping future visibility. Brands that show up early in AI answers often establish a durable presence. Brands that wait are forced to displace competitors who are already embedded in those responses.

Connor Gillivan, an SEO and generative search strategist who studies how brands surface across Google, ChatGPT, and other AI platforms, put it bluntly:

“ChatGPT, Claude, Gemini, Perplexity… they’re not just tools. They’re the new search engines. If your brand isn’t visible there, you’re already invisible to tomorrow’s customers.”

The longer AI visibility goes unmeasured, the more ground is lost quietly. There is no traffic dip to trigger alarm. No ranking drop to investigate. Visibility erodes before teams even realize it was there to lose.

Practical Use Cases Teams Are Already Solving

For many teams, AI visibility started as a curiosity. It quickly turned into a set of very practical problems that existing tools could not answer.

Teams are already using AI visibility data to solve issues like:

- Monitoring brand sentiment inside AI-generated answers, especially for comparison and recommendation queries where framing matters as much as inclusion

- Benchmarking competitors across AI platforms to understand who consistently appears in answers and who is being ignored

- Identifying content gaps that block AI citations, particularly in follow-up questions where brands tend to drop out

- Reducing reliance on a single discovery channel by understanding where AI-driven visibility supplements or replaces traditional search exposure

- Proving ROI from generative engine optimization efforts by tying visibility changes to referral traffic, brand demand, and assisted conversions

These are not abstract experiments. They affect how budgets are allocated, which content gets prioritized, and how leadership teams assess risk.

Without visibility into these areas, teams are left reacting to symptoms rather than addressing causes. That is what turns AI discovery into an operational blind spot rather than a manageable channel.

The Bigger Picture

Search still exists, but it now happens across multiple interfaces instead of a single results page.

It spans traditional results, AI summaries, conversational tools, and hybrid interfaces that blur the line between search and recommendation. Users move between these surfaces naturally, often without noticing the transition.

The brands that perform well in this environment are not choosing sides. They are building presence across systems that complement one another.

The discovery pie is getting larger, not smaller. But it is also becoming harder to measure with legacy frameworks.

Visibility without measurement is no longer a viable strategy. Not when AI platforms increasingly shape how customers discover options, compare alternatives, and decide which brands feel credible.

Tracking AI visibility is not about chasing the next trend. It is about restoring clarity in a search landscape that no longer fits into a single dashboard.

And for most brands, it is long overdue.

Ready to See Where Your Brand Actually Shows Up?

AI platforms are already shaping how customers discover and trust brands. Visibility without measurement is no longer a strategy.

Use Semrush’s AI Visibility Toolkit to track your brand’s presence and citations across ChatGPT, Perplexity, Gemini, and Google AI, and see exactly where you stand in the new discovery landscape.

Because if you are not measuring AI visibility with